
2022 People's Daily Online Algorithm Competition: Weibo Popularity Prediction

Competition page: http://data.sklccc.com/2022

Open-source code: https://aistudio.baidu.com/aistudio/projectdetail/5567567

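Task description

The rise of new internet media, and of Weibo in particular, has greatly accelerated the spread of information. Accurately predicting how popular a Weibo post will become helps in assessing the state of online public opinion.

The competition provides a Weibo propagation dataset: a collection of Weibo posts, each accompanied by the posting user's basic profile information at the time of posting. Participants must predict each post's popularity at a specified time, where popularity is determined jointly by the post's repost, comment and like counts.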

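Data description

The data come from Sina Weibo, and identifiers such as user IDs and post IDs have been anonymized. The dataset is split into a training set and a test set; all files are in CSV format (tab-separated).

- userid: user ID (anonymized)

- verified: whether the account is Weibo-verified

- uservip: user category

- userLocation: user's location

- userCreatetime: account creation time

- gender: gender

- statusesCount: number of posts the user has published

- followersCount: number of followers

- weiboid: post ID (anonymized)

- content: text of the post

- pubtime: publication time

- ObserveTime: observation (collection) time

- retweetNum: repost count at observation time

- likeNum: like count at observation time

- commentNum: comment count at observation time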

Evaluation metric

Predictions of each post's repost, comment and like counts are evaluated with the mean absolute percentage error (MAPE); lower is better.
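For reference, the textbook MAPE is the mean over posts of |true − predicted| / |true|. The sketch below implements that definition; how the competition combines the three targets and treats posts whose true count is 0 (common in this data) is not stated here, so the `eps` guard below is an assumption borrowed from scikit-learn's `mean_absolute_percentage_error`, and `mape` is a hypothetical helper name.

```python
import numpy as np


def mape(y_true, y_pred, eps=np.finfo(np.float64).eps):
    """Mean absolute percentage error: mean(|y_true - y_pred| / |y_true|).

    Denominators are clipped at `eps` (the same guard scikit-learn uses), so a
    true value of 0 produces a very large error instead of a division by zero.
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(y_true - y_pred) / np.maximum(np.abs(y_true), eps)))


# Hypothetical repost counts for three posts
print(mape([10, 4, 2], [8, 5, 2]))  # (0.2 + 0.25 + 0.0) / 3, about 0.15
```

Because the error is divided by the true count, over-predicting a post with 1 repost costs far more than the same absolute error on a post with 1,000 reposts.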

Step 1: Load the data

```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns

# The .csv files are tab-separated
train_data = pd.read_csv('train.data.csv', sep='\t')
test_data = pd.read_csv('infer.data.csv', sep='\t')

# Strip leading/trailing whitespace from the post text
train_data['content'] = train_data['content'].apply(lambda x: x.strip())
test_data['content'] = test_data['content'].apply(lambda x: x.strip())
```

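Step 2: Exploratory data analysis

Start by analyzing the dataset field by field, for example each user's average repost, comment and like counts. This analysis points to promising directions for feature engineering.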

- Total number of users

```python
train_data['userid'].nunique()  # 45583
```

- Mean comment, repost and like counts per user

```python
train_data.groupby(['userid'])[['commentNum', 'retweetNum', 'likeNum']].mean().head(3)
```

| userid | commentNum | retweetNum | likeNum |
|---|---|---|---|
| 1 | 2.750000 | 4.25 | 8.083333 |
| 2 | 0.333333 | 0.00 | 0.083333 |
| 3 | 0.000000 | 0.00 | 0.000000 |

Weibo content analysis

- Number of distinct post texts

```python
train_data['content'].nunique()  # 1202620
```

- Number of occurrences of each post text

```python
train_data['content'].value_counts()
```

```
所有男人都抵不住林有有这样往上贴的女人吗? 14127
“我家夫人小意柔情,以丈夫为天,我说一她从来不敢说二的!”少帅跪在搓衣板上,一脸豪气云天的说。督军府的众副官:脸是个好东西,拜托少帅您要一下! 11136
//@开心源070627:#王源德亚全球品牌代言人# #王源电视剧灿烂# 王源超话风追着雨,雨赶着风,而我陪着你,怕被挨骂的小小少年也已经长成了你想要成为的样子@TFBOYS-王源 10901
“穆先生,三年不见,我长大了,你又变了多少?”他的手肆无忌惮放在了她胸口:“是长大了不少……” 8698
“第一次?现在后悔还来的及” 她紧张却摇摇头,“我不后悔——” 8486
...
#周深加盟百赞音乐盛典# ✨ @卡布叻_周深 的歌声,像黑夜里的烛光,像朗空中的明月,像清晨的露珠,像黄昏的余晖,像亲切的问候,像甜蜜的微笑,像春天的微风,像冬日的火炉,像初恋者的心扉。浙江卫视为歌而赞的微博视频 1
#张远舞台氛围感#终于等到实力唱将张远开唱了,这一次的成都换季巡演,果然没有让我失望,曲目夏日感满满,唱跳舞台点燃现场氛围 1
真的很感谢@三叔兄 让我?️到了79个宝宝 1
#成毅南风知我意# cy #成毅傅云深# 你若不动岁月无恙,心若不动风又奈何;比心?༺ 成毅沉香如屑|应渊|唐周|南风知我意|傅云深 @成毅 1
看来还是得多发发自己要不然没得分 1
Name: content, Length: 1171028, dtype: int64
```

User category analysis

- Distribution of user categories (counted over posts)

```python
train_data['uservip'].value_counts()
```

```
-1     793157
0      548711
       223633
1       34237
3       20437
7       17558
2       15706
220      8975
4        1579
200      1012
10        802
5         148
Name: uservip, dtype: int64
```

The entry without a label appears to be posts whose `uservip` field is empty.

- Mean comment, repost and like counts by user category

```python
train_data.groupby(['uservip'])[['commentNum', 'retweetNum', 'likeNum']].mean().head(3)
```

| uservip | commentNum | retweetNum | likeNum |
|---|---|---|---|
|  | 2.536227 | 0.616658 | 5.713352 |
| 0 | 6.585261 | 4.328470 | 21.470601 |
| 1 | 3.880509 | 1.139440 | 4.056781 |

User location analysis

- Number of posts per user location

```python
train_data['userLocation'].value_counts()
```

```
             529926
其他          233139
北京           86722
广东           52722
海外           43670
...
广西 崇左         1
重庆 彭水苗族土家族自治县  1
香港 葵青区        1
湖北 仙桃         1
天津 汉沽区        1
Name: userLocation, Length: 536, dtype: int64
```

- Mean comment, repost and like counts by location

```python
train_data.groupby(['userLocation'])[['commentNum', 'retweetNum', 'likeNum']].mean().head(3)
```

| userLocation | commentNum | retweetNum | likeNum |
|---|---|---|---|
|  | 1.185690 | 0.340480 | 2.928094 |
| 上海 | 6.089585 | 2.591486 | 21.749898 |
| 云南 | 3.458444 | 0.918585 | 10.504483 |

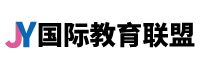

Follower count

```python
# Regression plots of each target against the follower count (first 1,000 rows)
plt.figure(figsize=(6, 4))
plt.subplot(131)
sns.regplot(x='followersCount', y='commentNum', data=train_data.head(1000))
plt.subplot(132)
sns.regplot(x='followersCount', y='retweetNum', data=train_data.head(1000))
plt.subplot(133)
sns.regplot(x='followersCount', y='likeNum', data=train_data.head(1000))
plt.tight_layout()
```

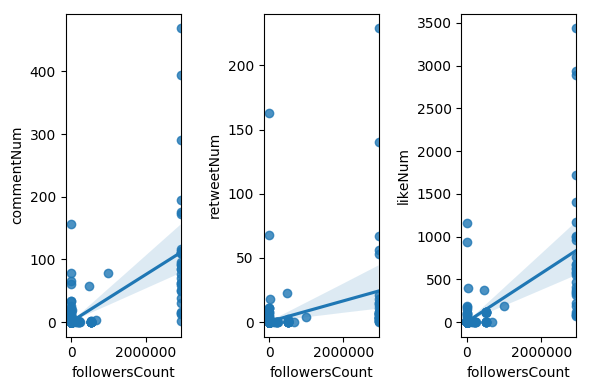

Number of posts published by the user

```python
# Regression plots of each target against the user's post count (first 1,000 rows)
plt.figure(figsize=(6, 4))
plt.subplot(131)
sns.regplot(x='statusesCount', y='commentNum', data=train_data.head(1000))
plt.subplot(132)
sns.regplot(x='statusesCount', y='retweetNum', data=train_data.head(1000))
plt.subplot(133)
sns.regplot(x='statusesCount', y='likeNum', data=train_data.head(1000))
plt.tight_layout()
```

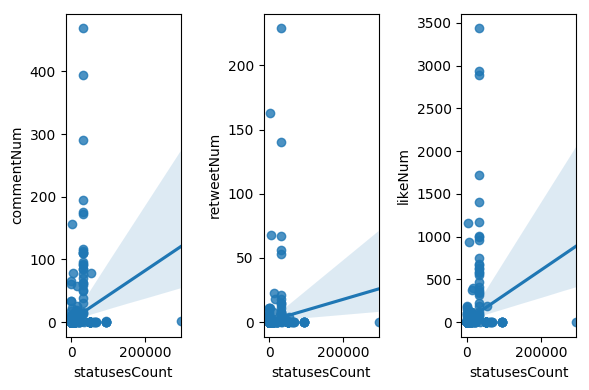

Verification status

```python
# Distribution of each target for verified vs. unverified accounts (first 1,000 rows)
plt.figure(figsize=(6, 4))
plt.subplot(131)
sns.boxenplot(x='verified', y='commentNum', data=train_data.head(1000))
plt.subplot(132)
sns.boxenplot(x='verified', y='retweetNum', data=train_data.head(1000))
plt.subplot(133)
sns.boxenplot(x='verified', y='likeNum', data=train_data.head(1000))
plt.tight_layout()
```

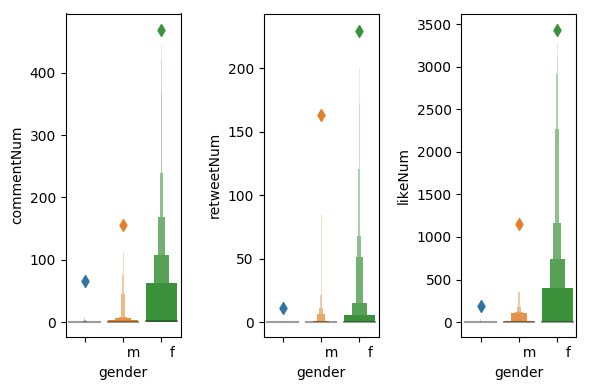

User gender

```python
# Distribution of each target by gender (first 1,000 rows)
plt.figure(figsize=(6, 4))
plt.subplot(131)
sns.boxenplot(x='gender', y='commentNum', data=train_data.head(1000))
plt.subplot(132)
sns.boxenplot(x='gender', y='retweetNum', data=train_data.head(1000))
plt.subplot(133)
sns.boxenplot(x='gender', y='likeNum', data=train_data.head(1000))
plt.tight_layout()
```

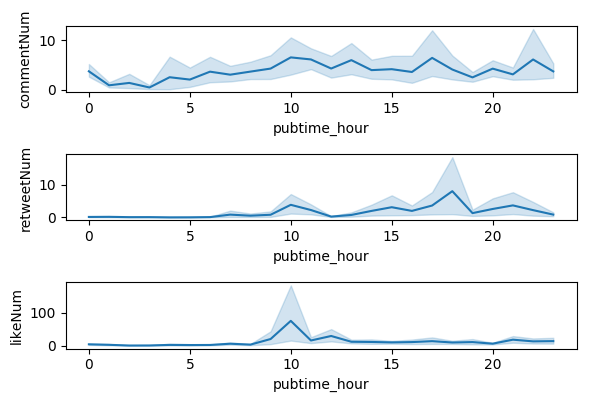

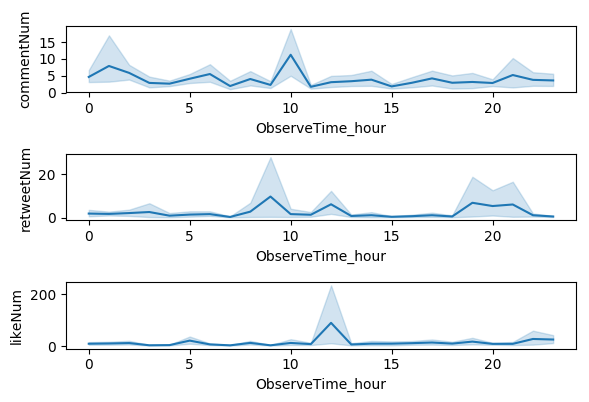

Publication time and observation time

```python
train_data['ObserveTime'] = pd.to_datetime(train_data['ObserveTime'])
train_data['pubtime'] = pd.to_datetime(train_data['pubtime'])

# How long the post had been online when its counts were collected
train_data['ShowTime'] = train_data['ObserveTime'] - train_data['pubtime']
train_data['ShowTime_minute'] = train_data['ShowTime'].dt.total_seconds() // 60

# Hour of day of publication and of observation
train_data['pubtime_hour'] = train_data['pubtime'].dt.hour
train_data['ObserveTime_hour'] = train_data['ObserveTime'].dt.hour
```
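To make the `ShowTime_minute` feature concrete, here it is computed on two hypothetical posts (the timestamp strings are assumed, not taken from the dataset). Since the targets are cumulative counts at observation time, the time a post has been online is presumably one of the strongest signals.

```python
import pandas as pd

# Two hypothetical posts with publication and observation timestamps
df = pd.DataFrame({
    'pubtime': ['2022-05-01 08:00:00', '2022-05-01 23:30:00'],
    'ObserveTime': ['2022-05-01 10:15:30', '2022-05-02 01:00:00'],
})
df['pubtime'] = pd.to_datetime(df['pubtime'])
df['ObserveTime'] = pd.to_datetime(df['ObserveTime'])

# Elapsed time between publication and observation, floored to whole minutes
df['ShowTime_minute'] = (df['ObserveTime'] - df['pubtime']).dt.total_seconds() // 60
df['pubtime_hour'] = df['pubtime'].dt.hour

print(df[['ShowTime_minute', 'pubtime_hour']])
# The first post was online 135.5 minutes (floored to 135); the second spans midnight (90 minutes)
```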

```python
# Mean counts by hour of publication (random sample of 10,000 posts)
plt.figure(figsize=(6, 4))
plt.subplot(311)
sns.lineplot(x='pubtime_hour', y='commentNum', data=train_data.sample(10000))
plt.subplot(312)
sns.lineplot(x='pubtime_hour', y='retweetNum', data=train_data.sample(10000))
plt.subplot(313)
sns.lineplot(x='pubtime_hour', y='likeNum', data=train_data.sample(10000))
plt.tight_layout()

# Mean counts by hour of observation
plt.figure(figsize=(6, 4))
plt.subplot(311)
sns.lineplot(x='ObserveTime_hour', y='commentNum', data=train_data.sample(10000))
plt.subplot(312)
sns.lineplot(x='ObserveTime_hour', y='retweetNum', data=train_data.sample(10000))
plt.subplot(313)
sns.lineplot(x='ObserveTime_hour', y='likeNum', data=train_data.sample(10000))
plt.tight_layout()
```

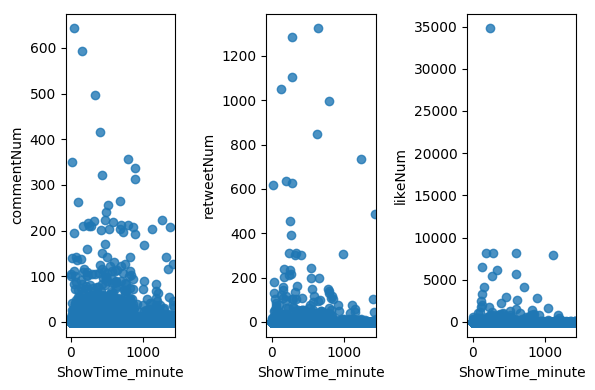

Time online (post age)

```python
# Regression plots of each target against minutes since publication
plt.figure(figsize=(6, 4))
plt.subplot(131)
sns.regplot(x='ShowTime_minute', y='commentNum', data=train_data.sample(10000))
plt.subplot(132)
sns.regplot(x='ShowTime_minute', y='retweetNum', data=train_data.sample(10000))
plt.subplot(133)
sns.regplot(x='ShowTime_minute', y='likeNum', data=train_data.sample(10000))
plt.tight_layout()
```

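Step 3: Modeling approach

This is a typical tabular data-mining problem with many fields of different types (categorical, numeric, text and time), so the fields must be transformed and encoded before modeling.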
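One simple way to encode the string-valued fields is integer label encoding with `pandas.factorize`, applied to train and test together so both share one code table. This is a sketch, not the author's method; the miniature frames and the gender values `'f'`/`'m'` are hypothetical.

```python
import pandas as pd

# Hypothetical miniature train/test frames with string-valued columns
train = pd.DataFrame({'userLocation': ['北京', '广东', '其他', '北京'],
                      'gender': ['f', 'm', 'f', 'f']})
test = pd.DataFrame({'userLocation': ['海外', '北京'],
                     'gender': ['m', 'f']})

for col in ['userLocation', 'gender']:
    # Factorize the concatenation so train and test share one code table;
    # codes follow order of first appearance, and missing values get -1
    codes, uniques = pd.factorize(pd.concat([train[col], test[col]], ignore_index=True))
    train[col + '_code'] = codes[:len(train)]
    test[col + '_code'] = codes[len(train):]

print(train)
print(test)
```

Tree models such as LightGBM can use these integer codes directly; for linear regression, one-hot encoding (for example `pd.get_dummies`) is usually the better fit, because the codes have no meaningful order.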

Feature engineering

- Hold out a validation set

```python
# Reload the raw data (the exploratory columns added in Step 2 are not kept)
train_data = pd.read_csv('train.data.csv', sep='\t')
test_data = pd.read_csv('infer.data.csv', sep='\t')

train_data['content'] = train_data['content'].apply(lambda x: x.strip())
test_data['content'] = test_data['content'].apply(lambda x: x.strip())

# Shuffle the rows, then hold out the last 25,000 as the validation set
train_data = train_data.sample(frac=1.0)
train_idx = np.arange(train_data.shape[0])[:-25000]
valid_idx = np.arange(train_data.shape[0])[-25000:]
```
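The summary lists multi-fold cross-validation as an improvement over this single holdout. A minimal sketch with scikit-learn's `KFold`, using a stand-in row count (`n_rows` is hypothetical), shows how the positional index arrays would be produced per fold. The target-mean features would then have to be recomputed inside each fold from that fold's training rows only; otherwise validation rows leak into their own features.

```python
import numpy as np
from sklearn.model_selection import KFold

n_rows = 10  # stand-in for train_data.shape[0]
kf = KFold(n_splits=5, shuffle=True, random_state=42)
folds = list(kf.split(np.arange(n_rows)))

for fold, (fold_train_idx, fold_valid_idx) in enumerate(folds):
    # Here one would rebuild the target-mean features from fold_train_idx,
    # train on fold_train_idx and evaluate on fold_valid_idx
    print(fold, len(fold_train_idx), len(fold_valid_idx))
```

Every row lands in exactly one validation fold, so out-of-fold predictions cover the whole training set.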

- Target-mean features per user

```python
# Mean of each target per user, computed on the training rows only
userid_feature = train_data.iloc[train_idx].groupby(['userid'])[['commentNum', 'retweetNum', 'likeNum']].mean().reset_index()

# Number of training posts per user
history_count = train_data.iloc[train_idx]['userid'].value_counts().to_dict()
userid_feature['userid_history_count'] = userid_feature['userid'].map(history_count)

# Users with fewer than 3 training posts fall back to the global training mean
userid_feature.loc[
    userid_feature['userid_history_count'] < 3,
    ['commentNum', 'retweetNum', 'likeNum']
] = train_data.iloc[train_idx][['commentNum', 'retweetNum', 'likeNum']].mean().values

# Suffix every column except the key
userid_feature.columns = [userid_feature.columns[0]] + [x + '_userid_mean' for x in userid_feature.columns[1:]]
```
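The user, text and location target-mean blocks all repeat one pattern: per-key training means, with rare keys shrunk to the global mean. As a sketch, a hypothetical helper `target_mean_feature` folds that pattern into one function. It differs slightly in naming (the count column is not given the `_mean` suffix), and the toy data are made up.

```python
import numpy as np
import pandas as pd


def target_mean_feature(df, train_idx, key, targets, min_count=3):
    """Per-key target means computed on the training rows only.

    Keys seen fewer than `min_count` times fall back to the global training mean,
    mirroring the `< 3` rule used above.
    """
    fit = df.iloc[train_idx]
    feat = fit.groupby(key)[targets].mean()
    count = fit.groupby(key).size()          # same index as feat
    rare = count < min_count
    global_mean = fit[targets].mean()
    for t in targets:
        feat.loc[rare, t] = global_mean[t]   # shrink rare keys to the global mean
    feat = feat.add_suffix(f'_{key}_mean')
    feat[f'{key}_history_count'] = count
    return feat.reset_index()


# Hypothetical toy data: user 2 has a single post, so it gets the global mean
df = pd.DataFrame({'userid': [1, 1, 1, 2, 3, 3, 3],
                   'likeNum': [3.0, 6.0, 9.0, 100.0, 0.0, 0.0, 3.0]})
feat = target_mean_feature(df, np.arange(len(df)), 'userid', ['likeNum'])
print(feat)
```

User 2's single post of 100 likes is replaced by the global mean (121 / 7, about 17.29), which keeps one outlier from defining a user's feature.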

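- Target-mean features per post text (identical texts recur many times)

```python
# Mean of each target per distinct post text, computed on the training rows only
content_feature = train_data.iloc[train_idx].groupby(['content'])[['commentNum', 'retweetNum', 'likeNum']].mean().reset_index()

# Number of training posts with the same text
history_count = train_data.iloc[train_idx]['content'].value_counts().to_dict()
content_feature['content_history_count'] = content_feature['content'].map(history_count)

# Texts seen fewer than 3 times fall back to the global training mean
content_feature.loc[
    content_feature['content_history_count'] < 3,
    ['commentNum', 'retweetNum', 'likeNum']
] = train_data.iloc[train_idx][['commentNum', 'retweetNum', 'likeNum']].mean().values

# Suffix every column except the key
content_feature.columns = [content_feature.columns[0]] + [x + '_content_mean' for x in content_feature.columns[1:]]
```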

- Features extracted from the post text

```python
def str_isall_chinese(text):
    """Return True if every character is a CJK ideograph (U+4E00 to U+9FA5)."""
    for ch in text:
        if not '\u4e00' <= ch <= '\u9fa5':
            return False
    return True


def str_contain_chinese(text):
    """Return True if the text contains at least one CJK ideograph (U+4E00 to U+9FFF)."""
    for ch in text:
        if '\u4e00' <= ch <= '\u9fff':
            return True
    return False


train_data['content_charcount'] = train_data['content'].apply(len)
train_data['content_all_ch'] = train_data['content'].apply(str_isall_chinese)
train_data['content_contain_ch'] = train_data['content'].apply(str_contain_chinese)
```
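On more than a million rows, the per-character Python loops are slow. A vectorized alternative (a swapped-in technique, not the author's code) uses pandas string methods with regular expressions over the same Unicode ranges. The sample strings are hypothetical.

```python
import pandas as pd

s = pd.Series(['微博流行度', 'Weibo 流行度', 'hello', ''])

# Same ranges as the loop-based helpers: U+4E00 to U+9FFF for "contains",
# U+4E00 to U+9FA5 for "all"; `*` makes the empty string count as all-Chinese,
# matching str_isall_chinese('')
contain_ch = s.str.contains(r'[\u4e00-\u9fff]', regex=True)
all_ch = s.str.fullmatch(r'[\u4e00-\u9fa5]*')

print(pd.DataFrame({'text': s, 'contain_ch': contain_ch, 'all_ch': all_ch}))
```

Missing (NaN) texts would yield NaN rather than a boolean, so they may need filling before these columns are used as features.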

- Target-mean features per user location

```python
# Mean of each target per location, computed on the training rows only
location_feature = train_data.iloc[train_idx].groupby(['userLocation'])[['commentNum', 'retweetNum', 'likeNum']].mean().reset_index()
history_count = train_data.iloc[train_idx]['userLocation'].value_counts().to_dict()
location_feature['location_history_count'] = location_feature['userLocation'].map(history_count)

# Locations seen fewer than 3 times fall back to the global training mean
location_feature.loc[
    location_feature['location_history_count'] < 3,
    ['commentNum', 'retweetNum', 'likeNum']
] = train_data.iloc[train_idx][['commentNum', 'retweetNum', 'likeNum']].mean().values

location_feature.columns = [location_feature.columns[0]] + [x + '_location_mean' for x in location_feature.columns[1:]]
```

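- Merge the features

```python
# Assumed definitions: uservip_feature and time_feature are not shown in this write-up,
# so here they are plain per-key means over the training rows
train_data['pubtime_hour'] = pd.to_datetime(train_data['pubtime']).dt.hour
uservip_feature = train_data.iloc[train_idx].groupby('uservip')[['commentNum', 'retweetNum', 'likeNum']].mean().add_suffix('_uservip_mean').reset_index()
time_feature = train_data.iloc[train_idx].groupby('pubtime_hour')[['commentNum', 'retweetNum', 'likeNum']].mean().add_suffix('_hour_mean').reset_index()

# Attach each feature table by its key
train_data = pd.merge(train_data, userid_feature, on='userid', how='left')
train_data = pd.merge(train_data, content_feature, on='content', how='left')
train_data = pd.merge(train_data, uservip_feature, on='uservip', how='left')
train_data = pd.merge(train_data, time_feature, on='pubtime_hour', how='left')
train_data = pd.merge(train_data, location_feature, on='userLocation', how='left')
```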
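Two properties of these left merges matter for the rest of the pipeline. A left merge keeps every row of the left frame in its original order, so the positional `train_idx`/`valid_idx` arrays still point at the same posts. It also leaves NaN wherever a key never appeared in the training rows, for example a validation-set user with no history. The toy frames below are hypothetical.

```python
import pandas as pd

# Hypothetical posts; userid 9 never occurs in the (toy) training statistics
rows = pd.DataFrame({'userid': [1, 2, 9], 'followersCount': [10, 20, 30]})
user_stats = pd.DataFrame({'userid': [1, 2], 'likeNum_userid_mean': [6.0, 1.0]})

merged = pd.merge(rows, user_stats, on='userid', how='left')
print(merged)  # row order preserved; userid 9 gets NaN for likeNum_userid_mean
```

This is why models that cannot handle missing values need a fill step before training.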

Approach 1: Linear regression

```python
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_absolute_percentage_error

targets = ['commentNum', 'retweetNum', 'likeNum']

# Model inputs: drop the post ID, the raw text and the targets, then keep only
# numeric/boolean columns (string fields such as userLocation or the timestamps
# are not encoded here). LinearRegression rejects NaN, so missing values are filled with 0.
X = (train_data.drop(['weiboid', 'content'] + targets, axis=1)
     .select_dtypes(include=['number', 'bool'])
     .astype(float)
     .fillna(0))
X_train, X_valid = X.iloc[train_idx], X.iloc[valid_idx]

model = LinearRegression()
for target in targets:
    model.fit(X_train, train_data[target].iloc[train_idx])
    pred = model.predict(X_valid)
    # scikit-learn's argument order is (y_true, y_pred)
    print(f'{target} MAPE:', mean_absolute_percentage_error(train_data[target].iloc[valid_idx], pred))
```

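Reported validation results. These were computed with the predictions passed as the first (`y_true`) argument of `mean_absolute_percentage_error`, so each percentage error is relative to the prediction rather than to the true count:

```
commentNum MAPE: 1.7469178890641088
retweetNum MAPE: 1.2269386458093727
likeNum MAPE: 1.5407936805042253
```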

Approach 2: Decision tree

```python
from sklearn.tree import DecisionTreeRegressor
from sklearn.metrics import mean_absolute_percentage_error

targets = ['commentNum', 'retweetNum', 'likeNum']

# Model inputs: numeric/boolean columns only; NaN is filled with 0 because
# older scikit-learn versions do not accept missing values in decision trees
X = (train_data.drop(['weiboid', 'content'] + targets, axis=1)
     .select_dtypes(include=['number', 'bool'])
     .astype(float)
     .fillna(0))
X_train, X_valid = X.iloc[train_idx], X.iloc[valid_idx]

model = DecisionTreeRegressor(max_depth=6)
for target in targets:
    model.fit(X_train, train_data[target].iloc[train_idx])
    pred = model.predict(X_valid)
    # scikit-learn's argument order is (y_true, y_pred)
    print(f'{target} MAPE:', mean_absolute_percentage_error(train_data[target].iloc[valid_idx], pred))
```

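Reported validation results (again computed with the predictions as the `y_true` argument):

```
commentNum MAPE: 4.9309003994933
retweetNum MAPE: 3.6300008142097706
likeNum MAPE: 2.0723876497611156
```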

Approach 3: LightGBM

```python
from lightgbm import LGBMRegressor
from sklearn.metrics import mean_absolute_percentage_error

targets = ['commentNum', 'retweetNum', 'likeNum']

# Model inputs: numeric/boolean columns only; LightGBM handles NaN natively, so no fill is needed
X = (train_data.drop(['weiboid', 'content'] + targets, axis=1)
     .select_dtypes(include=['number', 'bool'])
     .astype(float))
X_train, X_valid = X.iloc[train_idx], X.iloc[valid_idx]

model = LGBMRegressor(max_depth=6, n_estimators=100)
for target in targets:
    model.fit(X_train, train_data[target].iloc[train_idx])
    pred = model.predict(X_valid)
    # scikit-learn's argument order is (y_true, y_pred)
    print(f'{target} MAPE:', mean_absolute_percentage_error(train_data[target].iloc[valid_idx], pred))
```

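Reported validation results (again computed with the predictions as the `y_true` argument):

```
commentNum MAPE: 4.259334507873504
retweetNum MAPE: 2.203757388911284
likeNum MAPE: 1.2835411910087355
```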

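Summary and outlook

In this project we extracted features from the Weibo data, built models on them, and tried several machine-learning models. The dataset mixes several kinds of features (categorical, numeric, text and time), so features should be extracted from several angles, taking into account both the relationship between users and their posts and the content of the posts themselves.

Possible next steps:

- Add text features, such as TF-IDF or word embeddings

- Train deep-learning and multimodal models

- Train with multi-fold cross-validation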